State Vector Machines

Intuition

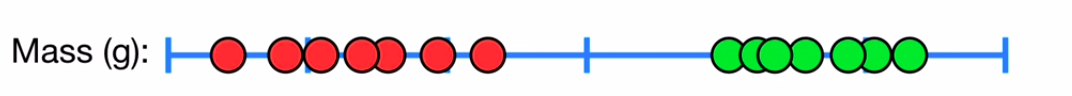

Classification is essentially finding a boundary that separates the data points into two segments so that when we get a new data point, the segment that it falls on determines the label it belongs to! The real challenge is positioning this boundary → which is the focus of every classifier. Let’s take the figure shown below as an example

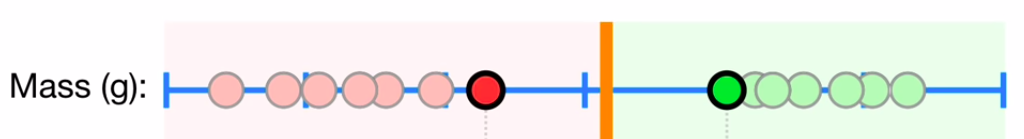

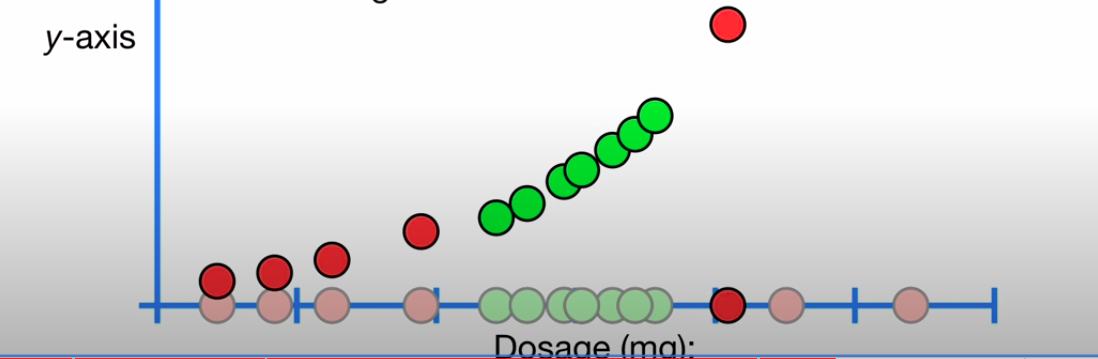

Here, the data is 1D - Mass(g) - and we need to classify the person as Obese (Green) or Not Obese (Red). If we put the decision boundary at the edge of the red points, as shown below, our classifier would work for points inside the respective clusters, but for a point at the boundary, as shown, it would classify it as not obese even though this point is closer to the red cluster.

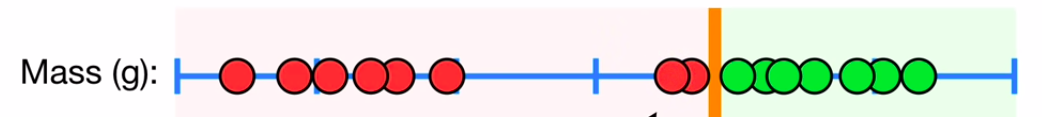

This is clearly not desirable, and this would also be the case if we move this boundary very close to the green points. Let’s define the shortest distance between the observations and the boundary as the Margin. One way to get a decent classifier would be to place this boundary in such a way so that the margins are equal → This is possible in the case where we put the boundary at exactly half the shortest distance between the data-sets, as shown below

This is called the Maximum Margin Classifier since the margins, in this case, have the largest value than any other possible case. But the shortfall of this is that it is sensitive to outliers:

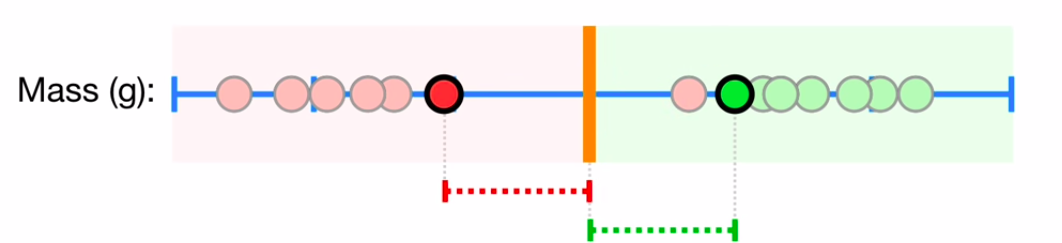

Here, the outliers push the Max Margins very close to the green cluster and thus, overfit the boundary to the training set as clearly a more equally distributed test set would have to make some misclassifications! Thus, to make a boundary that is more robust to these outliers, we must allow misclassifications by introducing soft margins around our boundary that signify regions in which misclassifications are allowed:

Thus, any red data point within this soft margin that falls in the green zone would be classified as green while the data in the red zone would be classified as red. This soft margin, in contrast to the margin above, need not be the shortest distance from the data to the boundary but can be tuned through cross-validation. This classifier is called a Support Vector Classifier → The observations within the soft margins are called support vectors which can be tuned. In 2D, the boundary would be a line, in 3D a plane → In general, it is called a hyperplane.

Non-linearly seperable data

Now, let us look at new case of drug dosage classification, where the dosage is only safe within a certain range of values and unsafe outside it:

Here, the data is not linearly separable since no single boundary can separate it. Thus, support vector classifiers fail here! One way to tackle this is by transforming this data into a higher dimensional plane. If we square each data point and look at the 2D data, then we see that it is now linearly separable!

Now, we can create a boundary in this new higher dimensional plane and just map that back to our original plane. This is called a Support Vector Machine. The transformation we performed on the data is called a Kernel, and in this case, the Kernel is a \(d^n\) kernel with n having the value of 2 → Polynomial kernel.Technically, the support vector classifier is also an SVM in is dimension.

Math of SVM

Hard Margin SVM

For a set of training data \(\{x_i, y_i\}\), with \(x \in \mathbb{R}^M\) and \(y \in \{-1,+1\}\) with the classifications being -1 and + 1 and \(i = 1, ...., N\) , let us apply a transformation first

\[\phi: \mathbb{R}^M \rightarrow \mathbb{R}^D \,\,\, s.t. \,\,\, \phi(x) \in \mathbb{R}^D\]Our objective is to fit a line through this data, and so our model is

\[\hat{y}(\mathbf{x}) = \mathbf{w}^T \phi(\mathbf{x}) + w_0\]which implies that classifications are:

- \(y_i(\mathbf{w}^T\phi(x_i) + w_0 ) > 0\) if the classification is correct

- \(y_i(\mathbf{w}^T\phi(x_i) + w_0 ) < 0\) if the classification is incorrect.

The distance of any training sample from the seperation line line is → \(d(\phi(x_i), L) = \frac{y_i}{\mid \mid \mathbf{w}\mid \mid }(\mathbf{w}^T\phi(x_i) + w_0 )\)

Now, our optimization problem is to maximize the minimum distance for each class, which can be formalized as

\[\underset{\mathbf{w}, w_0}{\text{argmax}} M = \underset{\mathbf{w}, w_0}{\text{argmax}} \{\min_i d(\phi(\mathbf{x_i}),L) = \underset{\mathbf{w}, w_0}{\text{argmax}} \frac{1}{\mid \mid \mathbf{w}\mid \mid } \{ \min_i y_i (\mathbf{w}^T\phi(\mathbf{x_i}) + w_0 ) \}\]Now, we add some constraints to our Margin → Set \(M = 1/\mid \mid \mathbf{w}\mid \mid\) , which essentially means that the minimum distance for our problem has been set to 1 so that we only have to worry about \(\frac{1}{\mid \mid w\mid \mid }\). In other words, we need to have:

\[\mid \mathbf{w}^T \phi(\mathbf{x_i})* + w_0\mid = 1\]This is only possible if data points satisfy:

\[y_i(\mathbf{w}^T \phi(\mathbf{x_i}) + w_0 ) \geq 1\]If we look at the problem of maximizing \(1/\mid \mid w\mid \mid\), it is the same as minimizing \(\mid \mid w\mid \mid\) → Let’s add two transformations to it :

- \[\mid \mid w\mid \mid \rightarrow \mid \mid w\mid \mid ^2\]

- \[\mid \mid w\mid \mid ^2 \rightarrow \frac{1}{2}\mid \mid w\mid \mid ^2\]

We see our minimization of \(\mid \mid w\mid \mid\) is still satisfied since minimizing half of its square will still give us the same result and so minimizing \(\frac{1}{2}\mid \mid w\mid \mid ^2\) should be the same as maximizing \(\mid \mid w\mid \mid ^{-1}\), which was out the original problem. Thus, we now have to find:

\[\min_{\mathbf{w},w_0} \frac{1}{2}\mid \mid \mathbf{w}\mid \mid ^2 \,\,\, s.t. \,\,\, y_i(\mathbf{w}^T\phi(\mathbf{x_i}) + w_0 ) \geq 1 \,\,\,\, \forall \,\,\,\, i = 1, ...., N\]This is called the primal form → the form we reached using our intuition. We had a maximization type optimization and we converted it to a minimization problem without messing with our original goal. We now have to solve a constrained minimization problem. We solve this by creating a Langrangian Formulation → a function of the form:

\[L(x,y,\lambda) = f(x,y) - \sum \lambda_i g_i(x,y)\]where f is our optimization target and we put constraints on it in the form of \(g_i\) which are \(\lambda_i\) is the Lagrange multipliers. Our approach to solving this is to differentiate the Lagrangian w.r.t the variables to get critical points

\[\nabla L(x,y,\lambda) = 0 \longrightarrow \nabla f(x,y) = \lambda \nabla g(x,y)\]and substitute it back into \(L\) to get a Dual Formulation. So, formalizing our SVM minimization as a Lagrangian with \(\alpha\) as our multiplier, we get:

\[L(\mathbf{w}, w_0, \mathbf{\alpha}) = \frac{1}{2}\mid \mid \mathbf{w}\mid \mid ^2 - \sum_{i=1}^{N}\alpha_i [y_i(\mathbf{w}^T \phi(\mathbf{x_i}) + w_0 ) - 1]\]To minimize this function, we first differentiate L w.r.t \(\mathbf{w}\) :

\[\begin{aligned} &\partial L/\partial \mathbf{w} = \mathbf{w} - \sum\alpha_iy_i \phi(\mathbf{x_i}) = 0 \\ \implies &\mathbf{w} = \sum\alpha_iy_i \phi(\mathbf{x_i}) \\ \end{aligned}\]Then, we differentiate L w.r.t \(w_0\) as follows:

\[\begin{aligned} & \partial L/\partial w_0 = - \sum\alpha_iy_i = 0\\ \implies &\sum \alpha_iy_i = 0 \\ \end{aligned}\]Then we differentiate w.r.t w :

\[\begin{aligned} & \partial L/\partial \mathbf{w} = \mathbf{w} - \sum\alpha_iy_i\phi(x_i) = 0 \\ \implies & \mathbf{w} = \sum \alpha_iy_i\phi(x_i) \\ \end{aligned}\]We now plug the values into our lagrangian:

\[\begin{aligned} &\frac{1}{2}\mid \mid \mathbf{w}\mid \mid ^2 - \sum_{i=1}^{N}\alpha_i [y_i(\mathbf{w}^T \phi(\mathbf{x_i}) + w_0 ) - 1] \\ &= \frac{1}{2}(\sum\alpha_iy_i \phi(\mathbf{x_i}) \sum\alpha_jy_j\phi(\mathbf{x_j})) - (\sum\alpha_iy_i\phi(\mathbf{x_i}) \sum\alpha_jy_j \phi(\mathbf{x_j})) - (\sum \alpha_iy_iw_0) + \sum \alpha_i \\ \therefore \,\,\, & L(\mathbf{\alpha}) = \sum \alpha_i - \frac{1}{2}\sum_i \sum_j \alpha_i \alpha_j y_i y_j [\phi(\mathbf{x_i}) . \phi(\mathbf{x_j})] \\ \end{aligned}\]The new Expression → \(L(\mathbf{\alpha}) = \sum \alpha_i - \frac{1}{2}\sum_i \sum_j \alpha_i \alpha_j y_i y_j [\phi(\mathbf{x_i}) . \phi(\mathbf{x_j})]\) is the Dual Form of the Maximum Margin Problem. An important thing to notice is that our dual form only depends on the dot products of our data points and thus, we can take advantage of Kernelization to make this independent of the nature of \(\phi\) :

\[L(\mathbf{\alpha}) = \sum \alpha_i - \frac{1}{2}\sum_i \sum_j \alpha_i \alpha_j y_i y_j K(\mathbf{x_i}.\mathbf{x_j})\]And for classifying any new point, all we have to do it replace it in the place of \(x_j\) as shown:

\[\hat{y}_{test} = \sum_{i=1}^{N} \hat{\alpha_i} [y_i(\phi(\mathbf{x_i}).\phi(\mathbf{x_{test}}) + w_0 )]\]and this should satisfy the Karush-Kuhn-tucker conditions:

- \[\alpha_i \geq 0\]

- \[y_i(\mathbf{w}^T\phi(\mathbf{x_i}) + w_0 ) - 1 > 0\]

- \[\alpha_i [y_i(\mathbf{w}^T\phi(\mathbf{x_i}) + w_0 ) - 1] = 0\]

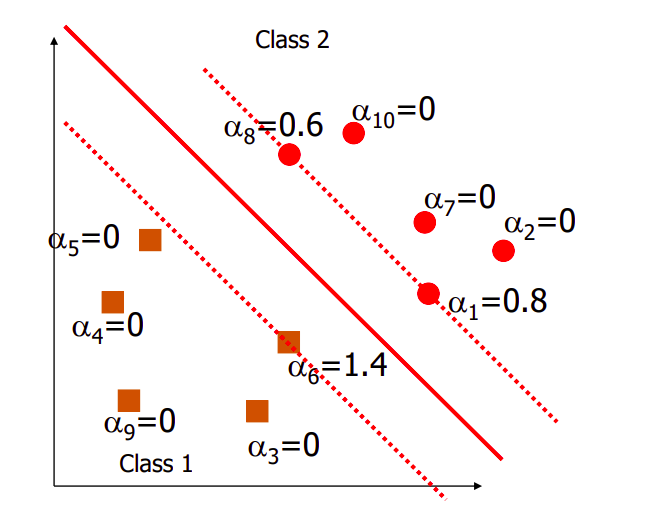

This is the key to SVMs → If \(\alpha = 0\) then the point is not contributing to the margin and is not a support vector and for all \(\alpha > 0\) , the point \(\mathbf{x}\) is a support vector and contributes to the margin.

Thus, in summary, the dual expression gives us a set of \(\alpha_i\) that represent points that are either support vectors or not. If we want to find the best boundary for these hard margins, all we have to do is compute

\[\mathbf{\hat{w}} = \sum \hat{\alpha_i} y_i \phi(\mathbf{x_i})\]Which we then substitute into the third KKT condition, t get \(w_0\) as follows:

\[\begin{aligned} &\alpha_i [y_i(\mathbf{\hat{w}}^T\phi(\mathbf{x_i}) + w_0 ) - 1] = 0 \\ \implies &y_i(\mathbf{\hat{w}}^T\phi(\mathbf{x_i}) + w_0 ) = 1 \\ \end{aligned}\]Now, the y labels are either +1 or -1, and so, \(w_0 = 1 - \mathbf{\hat{w}}^T\phi(\mathbf{x_i})\) or \(w_0 = -1 - \mathbf{\hat{w}}^T\phi(\mathbf{x_i})\) , which we can also write as:

\[w_0 = y_i - \mathbf{\hat{w}}^T\phi(\mathbf{x_i})\]In practice, we compute \(w_0\) by summing multiple such expressions and dividing by \(\alpha_i\). A new point will be classified to

- Class 1 if \(\mathbf{\hat{w}}^T\phi(\mathbf{x_i}) + \hat{w}_0 > 0\)

- Class 2 if \(\mathbf{\hat{w}}^T\phi(\mathbf{x_i}) + \hat{w}_0 < 0\)

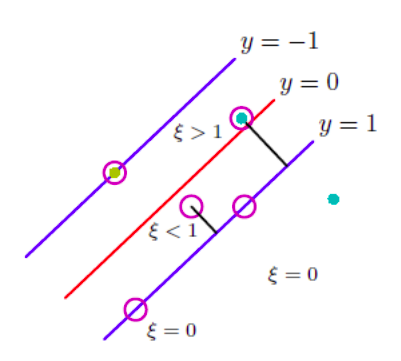

Soft-Margin SVM

The hard-margin constraint is very strong but does not work very well for overlapping classes or spread out data. Thus, we relax the constraints by allowing points to violate the margins → this is quantified by the slack variable \(\xi_i \geq 0\) for each point i, which measures the extent of violation.

Thus, for point outside the margins \(\xi_i = 0\) and for points inside the margin \(\xi_i = \mid y_i - \hat(y(\mathbf{x_i})\mid\), and now, our constraint becomes

\[y_i(\mathbf{w}^T\mathbf{x_i} + w_0 ) \geq 1 - \xi_i\]And so, our problem becomes:

\[\min_{\mathbf{w},w_0, \mathbf{\xi}} C \sum \xi_i + \frac{1}{2} \mid \mid w\mid \mid ^2 \,\,\,\, s.t \,\,\,\, y_i(\mathbf{w}^T\phi(\mathbf{x_i}) + w_0 ) \geq 1 - \xi_i \,\,\,\, \xi_i \geq 0 \,\,\, \forall n\]We again formulate the Lagrangian:

\[L(\mathbf{w}, w_0, \mathbf{\xi}, \mathbf{\alpha}, \mathbf{\lambda}) = = \{\frac{1}{2}\mid \mid \mathbf{w}\mid \mid ^2 + C\sum_i \xi_i \}- \sum_{i=1}^{N}\alpha_i [1 - \xi_i - y_i(\mathbf{w}^T \phi(\mathbf{x_i}) + w_0 )] + \sum_i \lambda_i(-\xi_i)\]Which, when differentiated gives the follownig expresions:

\[\begin{aligned} &\mathbf{w} = \sum\alpha_iy_i \phi(\mathbf{x_i})\\ &\sum \alpha_iy_i = 0 \\ &C - \alpha_i - \lambda_i = 0 \end{aligned}\]The interesting thing is that the third expression helps us eliminate \(\xi_i\) from the Dual form to get the expression:

\[\sum \alpha_i - \frac{1}{2}\sum_i \sum_j \alpha_i \alpha_j y_i y_j \phi(\mathbf(x_i)).\phi(\mathbf(x_j))\]Which is the same as before. Thus, we can see that the only impact \(\xi\) has is in shifting translating the constraint to include misclassifications. Thus, soft-margins allow us to perform the same optimization with an added impact on the classification constraint.